Oculus Connect is Oculus’s annual virtual reality developer conference, where augmented reality and VR developers and creators from around the world come together to collaborate and share ideas to push the VR industry forward. At this year’s conference, in addition to introducing Oculus Quest, the first all-in-one gaming system built for VR, developers and engineers shared deep dives into the technical challenges they’ve been working on and how their solutions will affect the future of VR.

Below are summaries and videos of some of the technical talks from this year’s Oculus Connect. To see all the sessions from OC5, visit the Oculus Connect website.

Bootstrapping social VR

John Bartkiw, Engineering Manager, Oculus

The best way to get the real feeling of presence in VR is to share an experience with someone else. In this session, John debunks the myth that building a multiplayer VR experience is prohibitively difficult. He walks through a Unity sample project step by step to show how to build invites and matchmaking, coordinated app launch and avatars, and VoIP and P2P networking.

Unlocking the future of rendering in VR with Scriptable Render Pipeline (SRP)

Tony Parisi, Head of AR/VR Brand Solution, Unity

Brad Weiters, AR/VR Technical Project Manager, Unity

Unity’s SRP gives developers a greater degree of control over, and customization of, the rendering process. Tony and Brad introduce the new High Definition Render Pipeline and discuss the challenges of rendering objects in VR, including the demand for a high, consistent frame rate and resolution, and the need to keep the experience consistent across two screens. The level of customization available with SRPs lets developers keep their render pipeline lean, optimizing for the assets they want and stripping out resource-intensive parts that aren’t necessary.

Reinforcing mobile performance with RenderDoc

Remi Palandri, Software Engineer, Oculus

RenderDoc is a graphics debugging tool that runs on PC and shows developers exactly what their application is doing. In this talk, Remi explains how it can be used to fix issues and optimize performance for apps running on Oculus Rift and Oculus Quest. He discusses how Ocean Rift, an experience about exploring the ocean, and Epic Roller Coasters, a multiplayer roller coaster racing game, maximize their performance by creatively limiting the textures and geometry they have to render.

Using deep learning to create interactive actors for VR

Kevin He, Founder/CEO, DeepMotion

Interactive motion describes the ability of an actor to move and react to stimulation in a flexible, intelligent way. In this presentation, Kevin talks about the importance of interactive motion in VR and how his team used deep learning to create actors that could respond to their environments. By running the experiments thousands of times, Kevin and his team were able to generate actors who could run, react to avoid obstacles, and perform motions without being shown a reference to mimic.

Oculus Lipsync SDK: Audio to facial animation

Elif Albuz, Engineering Manager, Oculus

Accurately depicting how people speak is an important step in creating believable, immersive avatars. In this presentation, Elif announces the 1.30 release of Oculus Lipsync, an SDK that helps developers animate speech more accurately. This new release features integration across Windows, macOS, and Android; the ability to generate animations both online and offline; and a laughter detection tool. Developers working on applications for Oculus Go will also have access to DSP acceleration, which uses deep learning models to free up computing power on the headset’s CPU.

Sound design for Oculus Quest

Tom Smurdon, Audio Design Manager, Oculus

Pete Stirling, Audio Engineering Lead, Oculus

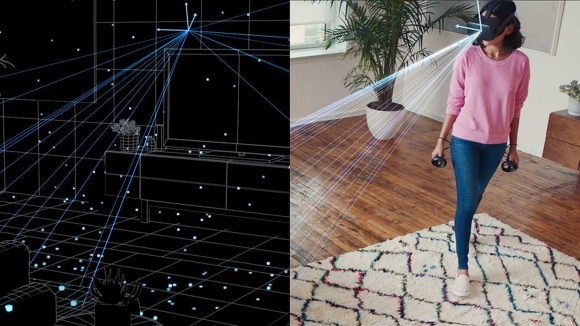

Tom and Pete discuss how Oculus Quest handles multiple sounds and voices at the same time, as well as sound across variable gamespaces. Engineers built a demo designed to test Oculus Quest’s audio by sending the maximum number of individual sounds the hardware could produce (64) to the headset, with no negative performance impact. They also discuss the challenge of sounds from the game world not matching the player’s real space, and the need to have the headset map the player’s room and adjust the sound to behave accordingly.

Advances in the Oculus Rift PC SDK

Volga Aksoy, Software Engineer, Oculus

Dean Beelor, Software Engineer, Oculus

In this presentation, Volga and Dean discuss the advancements made in the most recent releases of the Oculus Rift PC SDK and core runtime. Among these are adaptive compositor kickoff, a process that keeps the compositor from dropping frames while also reducing latency, and new processes for high-precision dithering, which helps the headset display accurate, crisp colors. This talk also spotlights Asynchronous Spacewarp, which allows the headset to preserve resources by running applications at half rate without sacrificing performance. See more details on the Oculus blog.
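As a rough illustration of why dithering helps color accuracy, the Python sketch below quantizes a single color value to 8-bit levels with and without a small amount of random noise. It is a generic random-dither example and not the Oculus runtime's implementation; the function names, the 256-level output, and the sample value are all assumptions chosen for illustration.

```python
import random


def quantize(value, levels=256):
    """Round a value in [0, 1] to the nearest of `levels` evenly spaced steps."""
    step = 1.0 / (levels - 1)
    return round(value / step) * step


def quantize_dithered(value, rng, levels=256):
    """Add up to half a step of uniform noise, then round to the nearest step."""
    step = 1.0 / (levels - 1)
    noisy = value + rng.uniform(-0.5, 0.5) * step
    noisy = min(1.0, max(0.0, noisy))  # keep the result inside the valid range
    return round(noisy / step) * step


def mean_error(value, samples=10000, seed=0, levels=256):
    """Return (plain error, dithered error), the latter averaged over many samples."""
    rng = random.Random(seed)
    plain = quantize(value, levels)
    total = sum(quantize_dithered(value, rng, levels) for _ in range(samples))
    dithered_mean = total / samples
    return abs(plain - value), abs(dithered_mean - value)


if __name__ == "__main__":
    # A hypothetical color value that falls between two 8-bit levels.
    plain_err, dithered_err = mean_error(100.3 / 255)
    print(f"plain error:    {plain_err:.6f}")
    print(f"dithered error: {dithered_err:.6f}")
```

Plain rounding always lands on the same level, so its error never averages out. The dithered version lands on one of the two neighboring levels in proportion to how close the true value is to each, so the average over many pixels or frames sits much nearer to the intended color.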