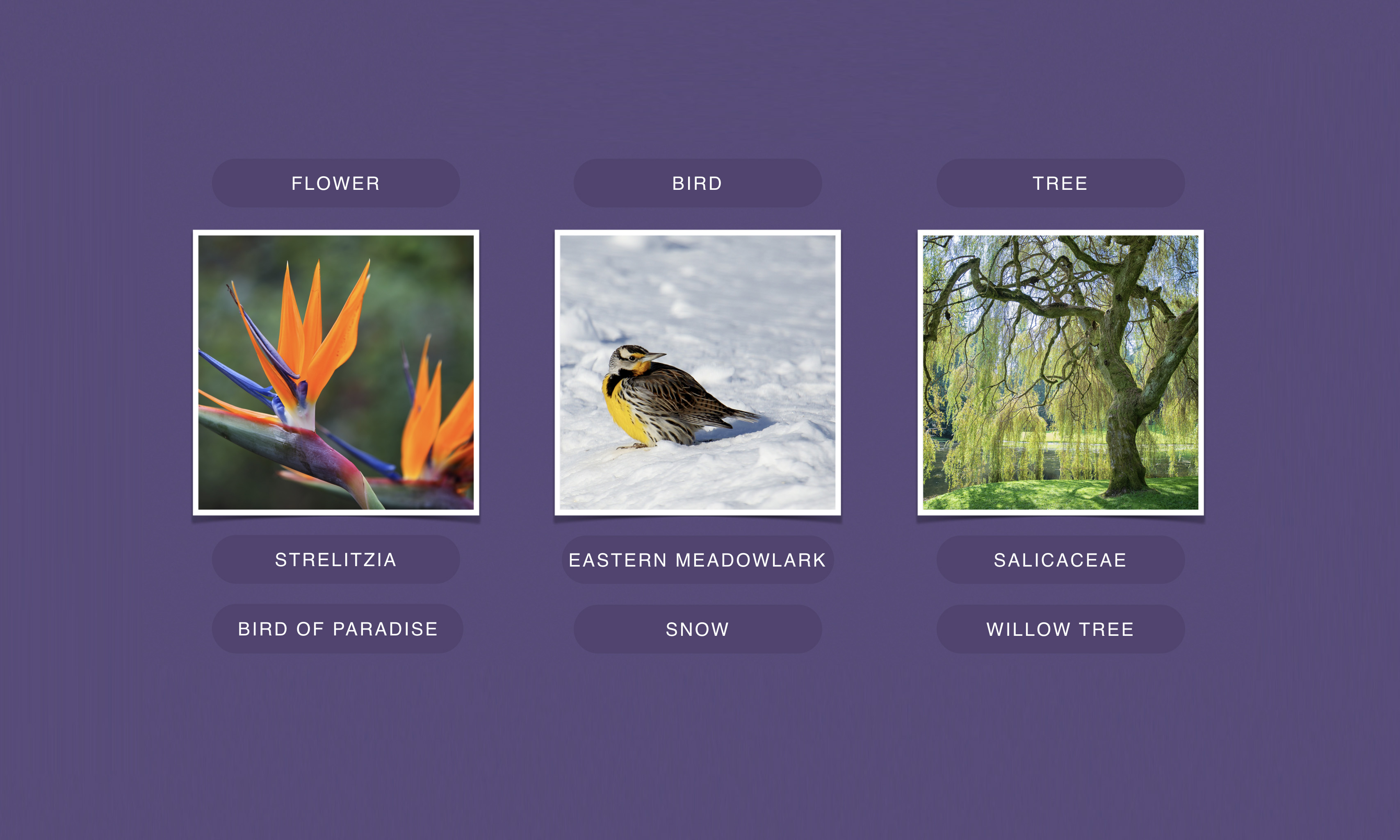

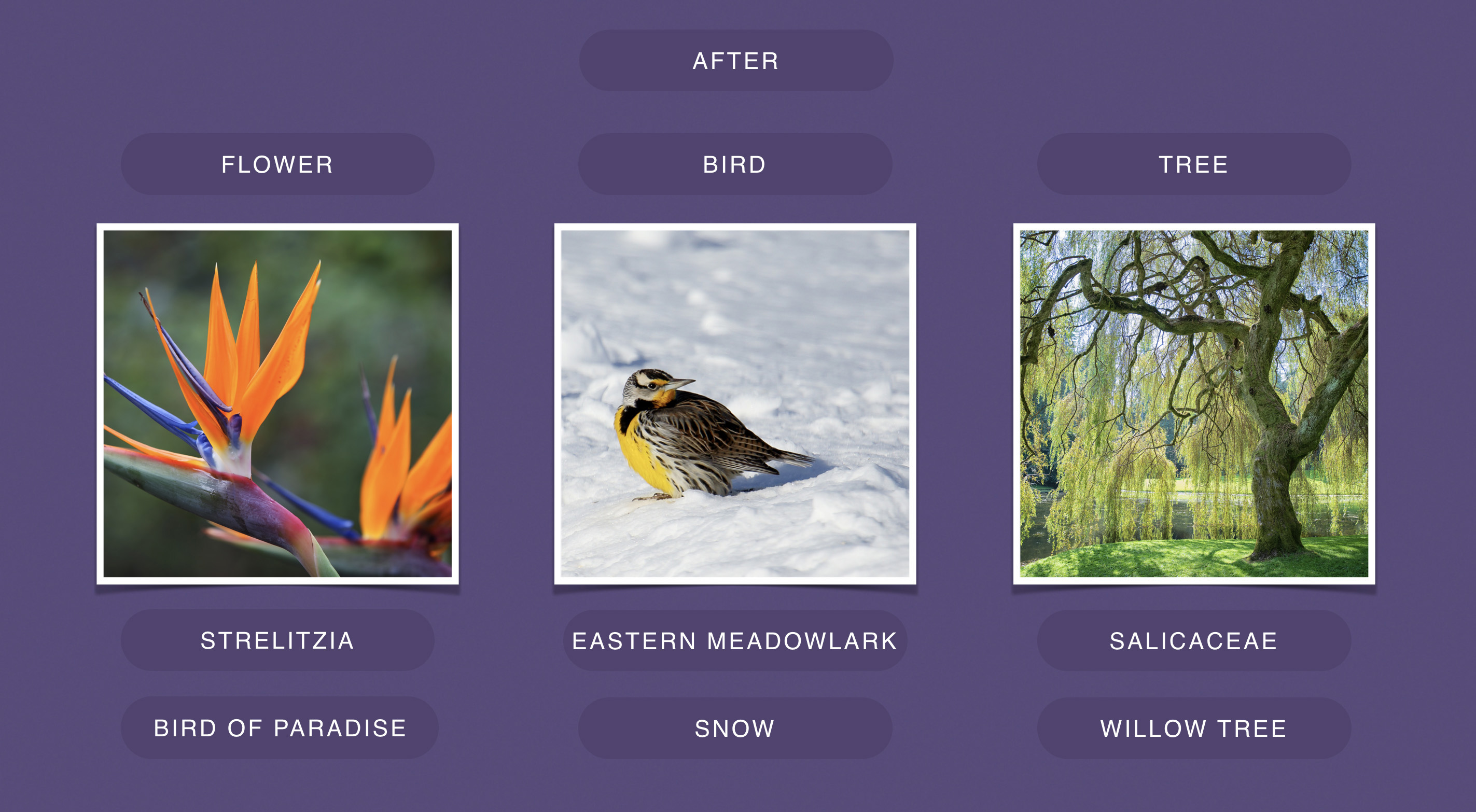

Image recognition is one of the pillars of AI research and an area of focus for Facebook. Our researchers and engineers aim to push the boundaries of computer vision and then apply that work to benefit people in the real world — for example, using AI to generate audio captions of photos for visually impaired users. In order to improve these computer vision systems and train them to consistently recognize and classify a wide range of objects, we need data sets with billions of images instead of just millions, as is common today.

Since current models are typically trained on pieces of data that are individually labeled by human annotators, boosting recognition isn’t as simple as throwing more images at these systems. This labor-intensive supervised learning process often yields the best performance results, but hand-labeled data sets are already nearing their functional limits in terms of size. Facebook is training some models on as many as 50 million images, but scaling up to billions of training images is infeasible when all supervision is supplied by hand.

Our researchers and engineers have addressed this by training image recognition networks on large sets of public images with hashtags, the biggest of which included 3.5 billion images and 17,000 hashtags. The crux of this approach is using existing, public, user-supplied hashtags as labels instead of manually categorizing each picture. This approach has worked well in our testing. By training our computer vision system with a 1 billion-image version of this data set, we achieved a record-high score — 85.4 percent accuracy — on ImageNet, a common benchmarking tool. Along with enabling this genuine breakthrough in image recognition performance, this research offers important insight into how to shift from supervised to weakly supervised training, where we use existing labels — in this case, hashtags — rather than ones that are chosen and applied specifically for AI training. We plan to open source the embeddings of these models in the future, so the research community at large can use and build on these representations for high-level tasks.

Using hashtags at scale

Since people often caption their photos with hashtags, we believed hashtags would be an ideal source of training data for models. Using them as labels also let us use hashtags as they were always intended: to make images more accessible, based on what people assume others will find relevant.

But hashtags often reference nonvisual concepts, such as #tbt for “throwback Thursday.” Or they are vague, such as the tag #party, which could describe an activity, a setting, or both. For image recognition purposes, tags function as weakly supervised data, and vague and/or irrelevant hashtags appear as incoherent label noise that can confuse deep learning models.

These noisy labels were central to our large-scale training work, so we developed new approaches that are tailored for doing image recognition experiments using hashtag supervision. That included dealing with multiple labels per image (since people who add hashtags tend to use more than one), sorting through hashtag synonyms, and balancing the influence of frequent hashtags and rare ones. To make the labels useful for image recognition training, the team trained a large-scale hashtag prediction model. This approach showed excellent transfer learning results, meaning the image classifications that the model produced were widely applicable to other AI systems. This new work builds on previous research at Facebook including investigations of image classification based on user comments, hashtags, and videos. This new exploration of weakly supervised learning was a broad collaboration that included Facebook’s Applied Machine Learning (AML) and Facebook Artificial Intelligence Research (FAIR).
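The three label-handling ideas in this section (multiple hashtags per image, synonym merging, and balancing frequent against rare hashtags) can be illustrated with a minimal sketch. This is not the team's implementation. The synonym table, the even 1/k split of the target across an image's hashtags, and the inverse-square-root weighting are all assumptions chosen for illustration, and every name below is hypothetical.

```python
import math
from collections import Counter

# Hypothetical synonym table: maps variant hashtags to one canonical label.
SYNONYMS = {"#puppy": "#dog", "#doggo": "#dog", "#kitty": "#cat"}

def canonicalize(tags):
    """Lowercase tags, merge synonyms, and drop duplicates while keeping order."""
    seen = []
    for tag in tags:
        canon = SYNONYMS.get(tag.lower(), tag.lower())
        if canon not in seen:
            seen.append(canon)
    return seen

def soft_target(tags, vocab):
    """Spread target mass evenly (1/k each) over the k in-vocabulary hashtags."""
    present = [t for t in canonicalize(tags) if t in vocab]
    target = [0.0] * len(vocab)
    for t in present:
        target[vocab[t]] = 1.0 / len(present)
    return target

def sampling_weights(dataset):
    """Weight each hashtag by 1/sqrt(count) so frequent tags dominate less."""
    counts = Counter(t for tags in dataset for t in canonicalize(tags))
    return {t: 1.0 / math.sqrt(c) for t, c in counts.items()}

# Toy data: four images with user-supplied hashtags (assumed, not real data).
vocab = {"#dog": 0, "#cat": 1, "#beach": 2}
dataset = [["#Puppy", "#dog", "#beach"], ["#dog"], ["#doggo", "#tbt"], ["#kitty"]]

print(soft_target(dataset[0], vocab))  # [0.5, 0.0, 0.5]
weights = sampling_weights(dataset)
print(weights["#dog"] < weights["#cat"])  # True: the frequent tag gets less weight
```

Note that an out-of-vocabulary tag such as #tbt simply contributes nothing to the target, which is one simple way to keep nonvisual hashtags from acting as labels.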

Breaking new ground in scale and performance

Since a single machine would have taken more than a year to complete the model training, we created a way to distribute the task across up to 336 GPUs, shortening the total training time to just a few weeks. With ever-larger model sizes — the biggest in this research is a ResNeXt 101-32x48d with over 861 million parameters — such distributed training is increasingly important. In addition, we designed a method for removing duplicates to ensure we don’t accidentally train our models on images that we want to evaluate them on, a problem that plagues similar research in this area.
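The purpose of the deduplication step, keeping evaluation images out of the training set, can be shown with a simplified sketch. The article does not describe the actual method, so the version below swaps in a much cruder technique: exact matching on a hash of the raw image bytes. It would miss resized or re-encoded copies, which a production system would need to catch. The function names are hypothetical.

```python
import hashlib

def content_key(image_bytes: bytes) -> str:
    """Fingerprint an image by hashing its raw bytes (catches exact copies only)."""
    return hashlib.sha256(image_bytes).hexdigest()

def remove_test_overlap(train_images, test_images):
    """Return the training images whose fingerprint is absent from the test set."""
    test_keys = {content_key(img) for img in test_images}
    return [img for img in train_images if content_key(img) not in test_keys]

# Toy byte strings stand in for image files (assumed data).
train = [b"img-a", b"img-b", b"img-c"]
test = [b"img-b", b"img-z"]
clean = remove_test_overlap(train, test)
print(len(clean))  # 2
```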

Though we had hoped to see performance gains in image recognition, the results were surprising. On the ImageNet image recognition benchmark — one of the most common benchmarks in the field — our best model achieved 85.4 percent accuracy by training on 1 billion images with a vocabulary of 1,500 hashtags. That’s the highest ImageNet benchmark accuracy to date and a 2 percent increase over that of the previous state-of-the-art model. Factoring out the impact of the convolutional-network architecture, the observed performance boosts are even more significant: The use of billions of images along with hashtags for deep learning leads to relative improvements of up to 22.5 percent.
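Because this paragraph mixes an accuracy figure, an increase over a baseline, and a relative improvement, it helps to see how each quantity is computed. The sketch below is illustrative only: the baseline of 83.4 percent is an assumption derived by reading the 2 percent increase as percentage points, and the toy predictions are made up.

```python
def top1_accuracy(predictions, labels):
    """Fraction of examples whose single best prediction matches the label."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def absolute_gain(new_acc, old_acc):
    """Difference in accuracy, expressed in the same units as the inputs."""
    return new_acc - old_acc

def relative_gain(new_acc, old_acc):
    """Improvement as a fraction of the baseline accuracy."""
    return (new_acc - old_acc) / old_acc

# Toy predictions (assumed data): three of four are correct.
preds = ["cat", "dog", "dog", "bird"]
labels = ["cat", "dog", "cat", "bird"]
print(top1_accuracy(preds, labels))  # 0.75

# 0.854 is from the article; 0.834 is a hypothetical baseline.
print(absolute_gain(0.854, 0.834))
print(relative_gain(0.854, 0.834))
```

The same absolute gain produces a larger relative gain when the baseline is lower, which is why the two kinds of figures should not be compared directly.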

On another major benchmark, the COCO object-detection challenge, we found that using hashtags for pretraining can boost the average precision of a model by more than 2 percent.

These are foundational improvements to image recognition and object detection, representing a step forward for computer vision. But our experiments also revealed specific opportunities and challenges related to both large-scale training and noisy labels.

For example, although increasing the size of the training data set is worthwhile, it may be at least as important to select a set of hashtags that matches the specific recognition task. We achieved better performance by training on 1 billion images with 1,500 hashtags that were matched with the classes in the ImageNet data set than we did by training on the same number of images with all 17,000 hashtags. On the other hand, for tasks with greater visual variety, the performance improvements of models trained with 17,000 hashtags became much more pronounced, indicating that we should increase the number of hashtags in our future training.
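Restricting a large hashtag vocabulary to the tags that correspond to a target task's classes could be sketched as follows. The article does not say how the 1,500 hashtags were matched to ImageNet classes, so the simple string normalization used here is an assumption, and the function names and sample data are hypothetical.

```python
import re

def normalize(name: str) -> str:
    """Lowercase and keep only letters and digits: 'Tabby Cat' -> 'tabbycat'."""
    return re.sub(r"[^a-z0-9]", "", name.lower())

def select_matching_hashtags(hashtags, class_names):
    """Keep the hashtags whose normalized form equals a normalized class name."""
    wanted = {normalize(c) for c in class_names}
    return [h for h in hashtags if normalize(h) in wanted]

# Toy vocabulary and target classes (assumed data).
hashtags = ["#goldenretriever", "#tbt", "#party", "#Tabby_Cat"]
classes = ["golden retriever", "tabby cat"]
print(select_matching_hashtags(hashtags, classes))
# ['#goldenretriever', '#Tabby_Cat']
```

A filter like this trades coverage for relevance: it removes tags such as #tbt and #party, but it also discards tags that might help on tasks with greater visual variety.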

Increasing the volume of training data is generally good for image classification. But it can create new problems, including an apparent drop in the ability to localize objects within an image. We also observed that our largest models still do not take full advantage of a 3.5 billion-image set, suggesting that we should train even bigger models.

The larger-scale, self-labeled future of image recognition

An important result of this study, even more than the broad gains in image recognition, is confirmation that training computer vision models on hashtags can work at all. While we used some basic techniques that merge similar hashtags and down-weight others, there was no need for complex “cleaning” procedures to eliminate label noise. Instead, we were able to train our models using hashtags with very few modifications to the training procedure. And size appears to be a benefit here, since networks trained on billions of images were shown to be remarkably resilient to label noise.

In the immediate future, we envision other ways to use hashtags as labels for computer vision. Those could include using AI to better understand video footage or to change how an image is ranked in Facebook feeds. Hashtags could also help systems recognize when an image falls under not only a general category but also a more specific subcategory. For example, an audio caption for a photo that mentions a bird in a tree is useful, but a caption that can pinpoint the exact species, such as a cardinal perched in a sugar maple tree, provides visually impaired users with a significantly better description.

Setting aside the specific use of hashtags, this research still points to the kind of broad image recognition improvements that could impact both new and existing products. More accurate models might improve the way we resurface Memories on Facebook, for example. And the research points to long-term implications related to the promise of weakly supervised data. As training data sets get larger, the need for weakly supervised — and, in the longer term, unsupervised — learning will become increasingly vital. Understanding how to offset the disadvantages of noisier, less curated labels is critical to building and using larger-scale training sets.

This study is described in greater detail in “Exploring the Limits of Weakly Supervised Pretraining” by Dhruv Mahajan, Ross Girshick, Vignesh Ramanathan, Kaiming He, Manohar Paluri, Yixuan Li, Ashwin Bharambe, and Laurens van der Maaten. Because the study operates at a first-of-its-kind scale, the observations detailed in the paper point to a range of new research directions, including the development of a new generation of deep learning models that are complex enough to learn effectively from billions of images.

The work also suggests that, as widely used as benchmarks such as ImageNet are, we need to develop new benchmarks that allow us to better gauge the quality and limitations of today’s image recognition systems and the larger, less supervised ones to come.